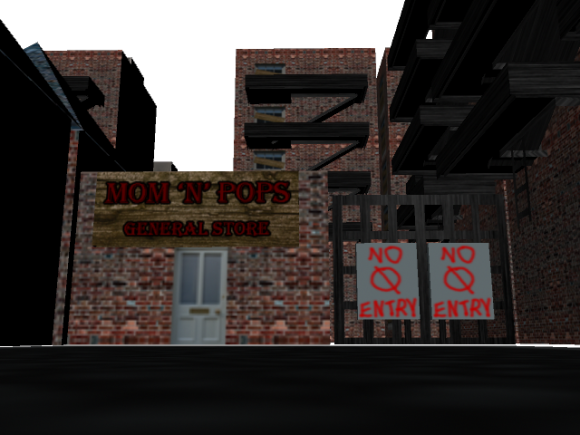

I’ve been playing a lot of God of War III lately and, thanks to my Intermediate Computer Graphics course, I couldn’t help but think about all the shaders being used. In God of War III, the detail in texturing and geometry is not just another step in graphics rendering, but one giant leap for the industry in terms of technology.

Programmable pixel shaders add textures and effects that give a whole new dimension to the quality of the final work. It’s a true generational leap, and yet the new game’s frame-rate is in the same ballpark as that of the previous two God of War titles.

In terms of character creation, concept art and a low-poly mesh from Maya are handed off to the 3D modellers, who create the basic models using a sculpting tool known as ZBrush. These models are then given detail (painted in via Photoshop) before being passed along to the next stages of the art pipeline: the character riggers and animators.

Kratos himself is a very detailed model, but it’s interesting to note that his raw polygon count is considerably lower than the 35,000 or so polygons that make up the in-game model of Drake in Uncharted 2. It is, however, significantly higher than that of the PS2 Kratos, who had only 5,000 polygons. He had about three textures on the PlayStation 2, and I think he has at least 20 textures on him now. The animation data on him is probably about six times as big.

Kratos is a big guy, but did you know that, given the intricacy of his model, it would take two PlayStation 2s to fit him in memory? That’s not even counting all the weapons he has to alternate between. Eat your heart out, Link.

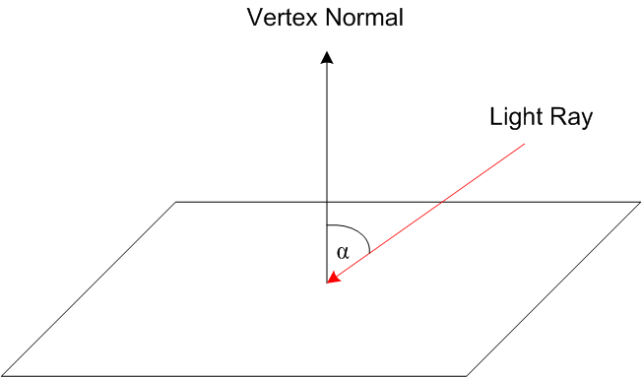

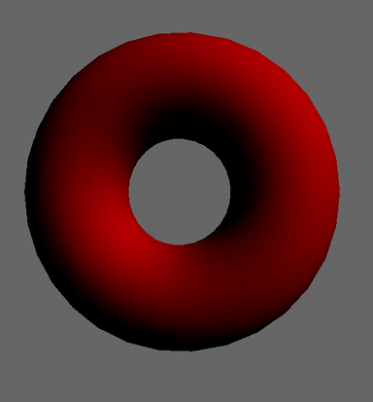

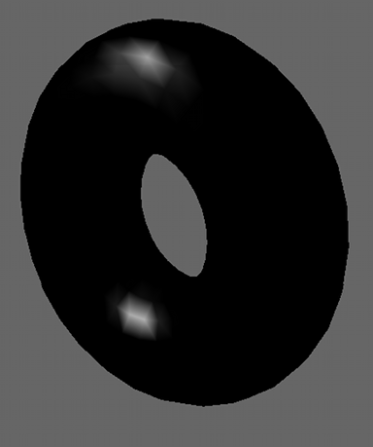

Each character gets a normal, diffuse, specular, gloss (power map), ambient occlusion, and skin shader map. Layered textures were used to create more tiling, and environment maps were used where needed.
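To make the list of maps a bit more concrete, here is a minimal sketch of how several of them could combine for a single pixel, assuming plain Blinn-Phong lighting. The function names, the gloss-to-exponent remap (`max_power`) and the ambient term are hypothetical choices for illustration, not the game's actual shader; the skin shader, layered textures and environment maps are left out, and the normal is treated as if tangent space equals world space.

```python
import math

def normalize(v):
    """Scale a 3-vector to unit length (a zero vector is returned unchanged)."""
    length = math.sqrt(sum(c * c for c in v)) or 1.0
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def decode_normal(texel):
    """Map a normal-map texel stored in [0, 1] back to a unit vector in [-1, 1]."""
    return normalize(tuple(2.0 * c - 1.0 for c in texel))

def shade_texel(diffuse, normal_texel, specular, gloss, ao,
                light_dir, view_dir, ambient=0.1, max_power=128.0):
    """Blinn-Phong shading driven by per-texel map samples.

    diffuse  -- RGB albedo from the diffuse map
    specular -- scalar intensity from the specular map
    gloss    -- scalar in [0, 1] from the gloss (power) map, remapped to an exponent
    ao       -- scalar in [0, 1] from the ambient-occlusion map (darkens ambient light only)
    """
    n = decode_normal(normal_texel)
    l = normalize(light_dir)
    v = normalize(view_dir)
    h = normalize(tuple(a + b for a, b in zip(l, v)))  # half vector
    n_dot_l = max(dot(n, l), 0.0)
    power = 1.0 + gloss * (max_power - 1.0)           # hypothetical gloss-to-exponent remap
    spec = specular * max(dot(n, h), 0.0) ** power if n_dot_l > 0.0 else 0.0
    return tuple(c * (ambient * ao + n_dot_l) + spec for c in diffuse)
```

A flat normal-map texel of `(0.5, 0.5, 1.0)` decodes to a normal pointing straight out of the surface; the gloss map then only controls how tight the specular highlight is.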

A new technique known as blended normal mapping adds to the realism of the basic model and hugely enhances the range of animation available. Muscles move convincingly, and facial animations convey the hatred and rage of Kratos in a way we’ve never seen before.

The system operates to such a level of realism that wrinkles in the character’s skin are added and taken away as joints within the face of the model are manipulated. The musculature simulation is so accurate that veins literally pop into view on Kratos’s arms as he moves them around.
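The game's exact implementation isn't described, but one common way to get joint-driven wrinkles is to blend between a neutral normal map and a "wrinkle" normal map, with the blend weight driven by a face joint's rotation. The sketch below assumes that approach; the 0 to 30 degree ramp, the optional region mask and all names are hypothetical.

```python
import math

def normalize(v):
    """Scale a 3-vector to unit length (a zero vector is returned unchanged)."""
    length = math.sqrt(sum(c * c for c in v)) or 1.0
    return tuple(c / length for c in v)

def wrinkle_weight(joint_angle_deg, rest_deg=0.0, full_deg=30.0):
    """Map a joint rotation to a 0..1 blend weight (hypothetical linear ramp, clamped)."""
    t = (joint_angle_deg - rest_deg) / (full_deg - rest_deg)
    return min(max(t, 0.0), 1.0)

def blend_normals(base_n, wrinkle_n, weight, mask=1.0):
    """Lerp between the neutral and wrinkle normals, then renormalize.

    mask limits the effect to a region (e.g. the brow) painted into a hypothetical mask map.
    """
    w = weight * mask
    mixed = tuple((1.0 - w) * b + w * k for b, k in zip(base_n, wrinkle_n))
    return normalize(mixed)
```

As the brow joint rotates from rest towards 30 degrees, the weight rises from 0 to 1 and the shading normals shift from the smooth map to the wrinkled one, so creases fade in without any extra geometry.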

In terms of character movement, over and above the pre-defined animations created by the team, the God of War technical artists also created secondary animation code. Why hand-animate hair, or a serpent’s tail, when the PS3 itself can mathematically calculate the way it should look? The system is called Dynamic Simulation, and its effects are subtle but remarkable, accurately generating motion that previously took animators many man-hours to replicate.

From God of War II to God of War III, the team has used Dynamic Simulation more and more for secondary animation on the characters. In previous games, hair and cloth were stiff because they were modelled into the creatures; now the team is actually adding motion to those pieces, so you will see hair and cloth moving.

“Towards the end of the previous game, in collaboration with Jason Minters, I created this dynamic system that uses the Maya hair system to drive a series of joints,” adds technical artist Gary Cavanaugh. “Each of the snakes on the gorgon’s head is independently moving. The animator did not have to individually pose all of these animations but they do have control over the physics… it improves a lot of the workflow for animators.”
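Cavanaugh's system uses Maya's hair solver to drive joints; the sketch below swaps in a much simpler technique, a Verlet-integrated joint chain with distance constraints, just to illustrate the idea he describes: the animator keys the root (say, where a snake attaches to the gorgon's head) and physics fills in the rest. It works in 2D for brevity, and the function name, damping value and iteration count are all assumptions.

```python
import math

def step_chain(pos, prev, root, seg_len, dt=1/30, gravity=(0.0, -9.8),
               damping=0.98, iterations=4):
    """Advance a 2D joint chain one frame with Verlet integration.

    pos, prev -- lists of (x, y) joint positions this frame and last frame
    root      -- animated position of the first joint (pinned, keyed by the animator)
    seg_len   -- rest length between consecutive joints
    Returns (new_pos, new_prev).
    """
    new_pos = [root]
    for (x, y), (px, py) in zip(pos[1:], prev[1:]):
        vx, vy = (x - px) * damping, (y - py) * damping  # implicit velocity from last frame
        new_pos.append((x + vx + gravity[0] * dt * dt, y + vy + gravity[1] * dt * dt))
    # Repeatedly restore segment lengths so the chain neither stretches nor compresses.
    for _ in range(iterations):
        for i in range(len(new_pos) - 1):
            (ax, ay), (bx, by) = new_pos[i], new_pos[i + 1]
            dx, dy = bx - ax, by - ay
            d = math.hypot(dx, dy) or 1e-9
            diff = (d - seg_len) / d
            if i == 0:  # root is pinned: move only the child joint
                new_pos[i + 1] = (bx - dx * diff, by - dy * diff)
            else:       # split the correction between both joints
                new_pos[i] = (ax + dx * diff * 0.5, ay + dy * diff * 0.5)
                new_pos[i + 1] = (bx - dx * diff * 0.5, by - dy * diff * 0.5)
    return new_pos, pos
```

Starting with a chain held out horizontally and calling `step_chain` once per frame, the chain swings down and settles hanging below its root, with no hand-placed keys for any joint but the first.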

The tech art team bridges the gap between artists and coders. The ‘zipper tech’ tool on the left shows how they create animated wounds with belching guts, while the shot on the right shows a bespoke animation rig for the gorgon’s tail.

One of the most crucial elements of God of War III’s cinematic look is its accomplished camerawork. As in previous God of War epics, and in contrast to Uncharted 2, the player has very little control over the in-game viewpoint. Instead, Sony Santa Monica has a small team whose job is to direct the action, much like a movie’s Director of Photography.

Think about it: so long as the gameplay works, and works well, having scripted camera events ensures that the player gets the most out of the hugely intricate and beautifully designed art that the God of War team has put together. When running from point A to point B, why focus the camera on a piece of ground and wall when instead it can pan back to reveal a beautiful, epic background vista?

Perhaps most astonishingly of all, the final God of War III executable file is just 5.3MB in size (uncompressed, and including SPU binaries) in a project that swallows up a mammoth 35GB of Blu-ray space (40.2GB for the European version with its support for multiple languages).

Another core part of God of War III’s cinematic look and feel comes from the basic setup of the framebuffer and the implementation of HDR lighting. Two framebuffer options for HDR on the PlayStation 3 are LogLUV (aka NAO32, used in Uncharted and Heavenly Sword) and RGBM, an alternative setup that has found a home in Uncharted 2 and indeed in God of War III.

The basic technical setups for both formats are covered elsewhere, but in terms of the final effect and what it means for the look of the game, the result is a massively expanded colour range that gives the artists higher precision in which to create a unique, stylised, film-like look.

Opting for RGBM over LogLUV means a significant saving in processing, although some precision is lost. The degree of that loss isn’t readily apparent to the human eye, and it likely matters even less given that the final image is quantised down to 24-bit RGB (8 bits per channel) for transmission to your display over HDMI.
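For readers who haven't met RGBM, the core idea fits in a few lines: store RGB divided by a shared multiplier M in three 8-bit channels and M itself in the fourth. Decoding is a single multiply, which is part of why it is cheap compared with LogLUV. This is a generic sketch, not God of War III's shader; in particular the range constant of 6.0 is an assumption, since engines choose their own.

```python
import math

RGBM_RANGE = 6.0  # assumed maximum brightness multiplier; engines pick their own value

def quantize8(x):
    """Clamp to [0, 1] and round to the nearest of 256 8-bit levels."""
    return round(min(max(x, 0.0), 1.0) * 255.0) / 255.0

def rgbm_encode(rgb, rng=RGBM_RANGE):
    """Pack a linear HDR colour into four 8-bit channels: RGB scaled by a shared M."""
    m = max(max(rgb) / rng, 1e-6)
    m = min(math.ceil(m * 255.0) / 255.0, 1.0)  # round M *up* so scaled RGB stays <= 1
    return tuple(quantize8(c / (m * rng)) for c in rgb) + (m,)

def rgbm_decode(rgbm, rng=RGBM_RANGE):
    """Recover linear HDR RGB with one multiply per channel."""
    r, g, b, m = rgbm
    return (r * m * rng, g * m * rng, b * m * rng)
```

Round-tripping `(3.0, 1.5, 0.25)` comes back within a few thousandths per channel, which illustrates the small precision loss discussed above. Anything brighter than the range constant clamps, so `(10.0, 0.0, 0.0)` decodes as `(6.0, 0.0, 0.0)`.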

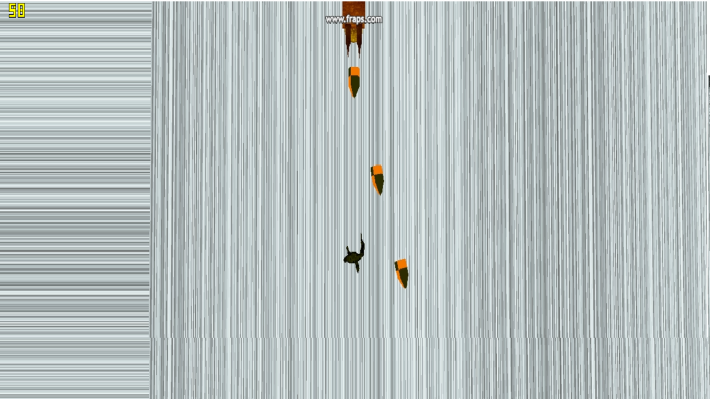

The filmic look of God of War III is boosted via effective motion blur. The shots demonstrate the camera and per-object implementations in the game.

In terms of post-processing effects, the game gets an additional boost in realism thanks to an impressive implementation of motion blur. Superficially, it’s similar to the systems seen in previous technological showcases like Uncharted 2: Among Thieves and Killzone 2, and it helps to smooth some of the judder caused by a frame-rate that can vary anywhere between 30 and 60 frames per second.

Most games that implement motion blur do so only on a “camera” basis, meaning the whole scene is blurred according to the camera’s movement, an approach of variable effectiveness when it comes to achieving a realistic look.

According to Sony Santa Monica’s Ken Feldman, motion blur is calculated not just for the camera, but also on a per-object and inner-object basis.

God of War’s camera and object motion blur is a subtle but effective contribution to the cinematic look of the game. Here at 30 per cent speed, the effect is more easily open to analysis.

The basics of the motion blur system mimic what we see on the cinema screen. Movies run at only 24 frames per second, yet they look smooth. While filming, a camera’s shutter typically stays open for about half of each frame (the standard 180-degree shutter), or roughly 1/48th of a second, and any movement during that window is blurred in the captured image. It’s that phenomenon the tech seeks to mimic in God of War III: more cinematic, more realistic.
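A common way to fake that shutter in a game (not necessarily Sony Santa Monica's exact method) is a velocity buffer: work out how far each pixel moved since the last frame, scale that by the fraction of the frame the virtual shutter is "open", and average samples along that path. With camera-only blur every pixel shares one velocity; per-object blur gives each object's pixels their own. The 1D sketch below assumes that design; the names and the 8-sample count are hypothetical.

```python
def velocity_px(curr_x, prev_x, shutter_fraction=0.5):
    """Screen-space motion during the simulated exposure, in pixels.

    shutter_fraction = exposure time / frame time; 0.5 mimics a 180-degree film shutter.
    """
    return (curr_x - prev_x) * shutter_fraction

def motion_blur_row(colors, velocities, samples=8):
    """Blur one scanline: average each pixel's colour along its own velocity vector.

    velocities holds a per-pixel value, so a camera-only blur fills it with one number,
    while a per-object blur writes each object's own motion into its pixels.
    """
    n = len(colors)
    out = []
    for x in range(n):
        v = velocities[x]
        total = 0.0
        for s in range(samples):
            t = s / (samples - 1) - 0.5                     # spread samples over [-0.5, 0.5]
            xi = min(max(int(round(x + v * t)), 0), n - 1)  # clamp to the row
            total += colors[xi]
        out.append(total / samples)
    return out
```

A single bright pixel under a uniform velocity of 4 pixels gets smeared across its neighbours, keeping only a quarter of its original intensity at its own position. Real implementations also have to handle a moving object's blur spilling over a static background, which this simplified gather ignores.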

Initially the game used the RSX chip to carry out a traditional 2x multisampling anti-aliasing effect. This, combined with the game’s lack of high-contrast edges, produced an extremely clean look in last year’s E3 demo. For the final game, the Sony Santa Monica team implemented a solution that goes way beyond that.

MLAA-style edge-smoothing looks absolutely sensational in still shots, but the effect often deteriorates, with pixel-popping artifacts. In God of War III this only became especially obvious in the scene shown in the bottom-right image.

According to director of technology Tim Moss, the God of War III team worked with Sony’s technology group in the UK to produce an edge-smoothing technique that the developers call MLAA, or morphological anti-aliasing. Indeed, Moss’s colleague Christer Ericson took us to task on the specifics of MLAA a few months back in a DF blog post, revealing that the team had put extensive research into the technique in search of their own solution.

“The core implementation of the anti-aliasing was written by some great SCEE guys in the UK, but came very late in our development cycle making the integration a daunting task,” adds senior staff programmer Ben Diamand.

The specifics of the implementation are still unknown at this time (though Ken Feldman suggests it “goes beyond” the papers Ericson discussed in the DF piece), but the bottom line is that the final result in God of War III is simply phenomenal: edge-aliasing is all but eliminated, and the sub-pixel jitter typically associated with this technique is massively reduced compared with other implementations we’ve seen.
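Since the game's implementation isn't public, here is only a heavily simplified sketch of the general idea behind morphological anti-aliasing as published by Alexander Reshetov in 2009. The idea is to find colour discontinuities, treat runs of edge pixels as a stair-step shape, reconstruct the smooth line that step approximates, and blend each pixel by the area that line cuts off. The sketch handles horizontal edges only and assumes every run is an L-shape starting at its left end; real MLAA classifies L, Z and U shapes by inspecting both ends of the run. All names and the 0.1 threshold are hypothetical.

```python
def detect_horizontal_edges(luma, threshold=0.1):
    """Flag each pixel whose luminance differs sharply from the pixel directly below it."""
    return [[abs(luma[y][x] - luma[y + 1][x]) > threshold for x in range(len(luma[0]))]
            for y in range(len(luma) - 1)]

def find_runs(flags):
    """Return (start, length) for every run of consecutive True values in one row."""
    runs, start = [], None
    for x, f in enumerate(flags + [False]):  # sentinel closes a run at the row's end
        if f and start is None:
            start = x
        elif not f and start is not None:
            runs.append((start, x - start))
            start = None
    return runs

def l_shape_weights(length):
    """Per-pixel area under a line falling from height 0.5 to 0 over `length` pixels.

    Pixel i covers x in [i, i + 1]; integrating y(x) = 0.5 * (1 - x / length) over that
    interval gives the expression below, and the weights sum to length / 4.
    """
    return [0.5 * (1.0 - (2 * i + 1) / (2.0 * length)) for i in range(length)]

def mlaa_horizontal(luma, threshold=0.1):
    """Simplified MLAA pass over horizontal edges of a 2D luminance image."""
    out = [row[:] for row in luma]
    for y, row_flags in enumerate(detect_horizontal_edges(luma, threshold)):
        for start, length in find_runs(row_flags):
            for i, a in enumerate(l_shape_weights(length)):
                x = start + i
                # Blend the pixel above the edge toward its neighbour across the edge.
                out[y][x] = (1.0 - a) * luma[y][x] + a * luma[y + 1][x]
    return out
```

On a bright step `[[0, 1, 1, 1], [0, 0, 0, 0]]`, the three edge pixels in the top row are blended most strongly next to the step and progressively less further along it, which turns a hard stair into a gradient. That is the same reason MLAA is cheap: it needs only the final image, not extra samples per pixel.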

The custom anti-aliasing solution is another example of how PlayStation 3 developers are using the Cell CPU as a parallel graphics chip working in tandem with the RSX. The basic idea is to move tasks typically performed by the graphics chip over to the Cell. Post-processing effects are particularly well suited to being ported across.

The more flexible nature of the CPU means that while such tasks can be more computationally expensive, you are left with a higher-quality result. The increased latency incurred can be reduced by parallelising across multiple SPUs.

In the case of God of War III, frames take between 16ms and 30ms to render. The original 2x multisampling AA solution took a big chunk of that rendering time: 5ms. The MLAA algorithm, by contrast, takes 20ms of CPU time, but because it runs across five SPUs, the overall latency is a mere 4ms. So the final result is actually faster, and the previous 5ms of GPU time can be repurposed for other tasks.
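The 4ms figure is simply the 20ms of work divided evenly over five SPUs. A minimal sketch of that arithmetic, plus one hypothetical way to split a frame into per-SPU bands, is below. The 720-row frame height is an assumption for the example, and a real MLAA split would also need bands to overlap slightly so that edges crossing a band boundary are handled.

```python
def split_rows(height, workers):
    """Divide a frame's rows into contiguous, near-equal bands, one per worker."""
    base, extra = divmod(height, workers)
    bands, start = [], 0
    for w in range(workers):
        size = base + (1 if w < extra else 0)  # spread any leftover rows over the first bands
        bands.append((start, start + size))
        start += size
    return bands

def parallel_latency_ms(total_ms, workers):
    """Wall-clock time if work splits perfectly evenly (ignores sync and band overlap)."""
    return total_ms / workers
```

With the numbers quoted above, `parallel_latency_ms(20, 5)` gives 4.0ms of latency, compared with the 5ms that 2x MSAA cost on the GPU, and each of the five SPUs would handle a 144-row band of a 720-row frame.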

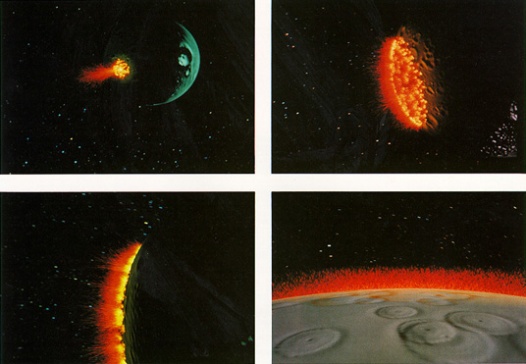

While the detail that the Sony Santa Monica team has put into the characters and environments is clearly immense, it’s the combination with the pure rendering tech that gives the game its state-of-the-art look and feel. The new God of War engine excels at handling dynamic light sources, for example.

God of War III triumphs in handling dynamic lighting, one of the big features of the game’s engine: it supports up to 50 dynamic lights per game object, and it does so without a deferred lighting scheme. Helios’ head (bottom right) is the most obvious example of the player directly interacting with dynamic lighting. The team placed lights in Maya and had them update in real time in the game on the PS3; it’s like being able to paint with lights.
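Without a deferred scheme, each object is shaded in a forward pass that loops over the lights affecting it, which is why a per-object cap like 50 matters. The sketch below assumes that structure. The selection heuristic (intensity over squared distance), the inverse-square falloff and the dictionary layout for lights are hypothetical; only the 50-light limit comes from the text above.

```python
import math

MAX_LIGHTS_PER_OBJECT = 50  # per-object limit quoted for God of War III's engine

def light_influence(light, obj_center):
    """Rough importance: intensity divided by squared distance to the object's centre."""
    d2 = sum((a - b) ** 2 for a, b in zip(light["pos"], obj_center))
    return light["intensity"] / max(d2, 1e-6)

def pick_lights(lights, obj_center, limit=MAX_LIGHTS_PER_OBJECT):
    """Keep the `limit` most influential lights for one object (hypothetical heuristic)."""
    return sorted(lights, key=lambda l: light_influence(l, obj_center), reverse=True)[:limit]

def shade_point(point, normal, albedo, lights):
    """Forward-shade one surface point: sum Lambert terms with inverse-square falloff."""
    total = 0.0
    for light in lights:
        to_light = [a - b for a, b in zip(light["pos"], point)]
        d2 = max(sum(c * c for c in to_light), 1e-6)
        d = math.sqrt(d2)
        n_dot_l = max(sum(n * c for n, c in zip(normal, to_light)) / d, 0.0)
        total += light["intensity"] * n_dot_l / d2
    return albedo * total
```

Because the light list is rebuilt per object each frame, a designer moving a light in Maya only changes the inputs to `pick_lights` and `shade_point`, which fits the "paint with lights" workflow described above.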

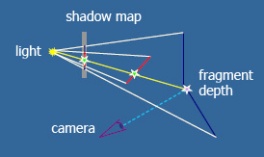

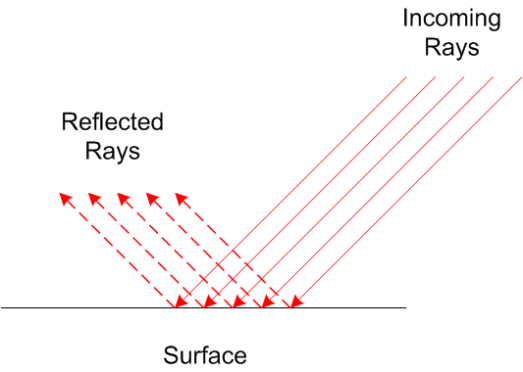

Where there is light, there is shadow. Or at least there should be. In the majority of videogames, shadowing tech is fairly basic. Producing realistic shadows is computationally expensive, so we get a range of artifacts: serrated edges that look ugly up close, or cascaded shadow maps that visibly step down in quality right before your eyes.

God of War III stands out in this regard simply because you don’t tend to notice the shadows: they’re realistic. The human eye is drawn to elements that stick out like a sore thumb, and that includes shadows. State-of-the-art techniques result in a very natural look. The effect is subtle and works beautifully, creating a visual feast for players, with graphics that even surpass blockbuster movies.
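The article doesn't say which shadowing techniques the game uses, so as a general illustration only, here is percentage-closer filtering (PCF). PCF is a standard way to soften the serrated edges mentioned above: instead of a single lit/unlit test against the shadow map, it averages the test over neighbouring texels. The bias value, the 3x3 kernel and the function name are assumptions.

```python
def shadow_factor(shadow_map, u, v, depth, bias=0.005, radius=1):
    """Percentage-closer filtering: fraction of nearby shadow-map texels that see the light.

    shadow_map -- 2D list of depths as seen from the light
    u, v       -- integer texel coordinates of the point projected into light space
    depth      -- the point's own depth from the light
    Returns 1.0 when fully lit, 0.0 when fully shadowed, and in-between values on edges.
    """
    h, w = len(shadow_map), len(shadow_map[0])
    lit = taps = 0
    for dv in range(-radius, radius + 1):
        for du in range(-radius, radius + 1):
            x = min(max(u + du, 0), w - 1)  # clamp taps to the map's borders
            y = min(max(v + dv, 0), h - 1)
            lit += depth - bias <= shadow_map[y][x]  # bias avoids self-shadowing "acne"
            taps += 1
    return lit / taps
```

Next to an occluder's edge, the factor steps through intermediate values such as 1/3 and 2/3 instead of jumping straight from 0 to 1, which is what turns a jagged shadow boundary into a soft one.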