I went to MIGS this weekend and had a blast. Being surrounded by all these games, as well as fellow students, up-and-coming developers and professionals from major studios, is quite frankly a dream come true.

My undisputed favorite part was the presentation by Ben Mattes of Warner Bros. Games Montréal. He talked about making a movie and a game at the same time, sharing his team’s experiences creating the cinematics of Arkham Origins.

We all saw the TV spots and trailers. Those CG cutscenes looked so visually amazing that I honestly thought it was a live-action movie at first glance.

Naturally, the process was very difficult. According to their stories, they had since late last year to create everything, which is a very tight schedule. That wasn’t even the worst of it. Given that they were telling a story, they naturally had to follow a script. The problem was that the script wasn’t readily available to them from the start, as you’d expect. No, the script was written, reviewed and approved in increments for the sake of editing flexibility, which put Mr. Mattes’s team at a disadvantage on schedule. Considering how serious WB and DC are about their characters, it was not like WB Games could take any liberties. Anything having to do with the story and characters began and ended with the property owners; the rest was left to the cinematic cutscene developers.

In order to properly animate the characters of the game, they made extensive use of motion capture and shot everything at a studio with an army of stuntmen and stuntwomen enacting the actions of the characters. Everything from Batman’s martial arts to Joker’s over-the-top body language to Copperhead’s movements was done with motion capture. On the topic of Copperhead, things like climbing on the walls were simulated with walls and rails that they built. Whenever a movement required a specific environment, the team built it in order to capture the right animation.

Indeed, they put in more effort than you would imagine, and of course it was a difficult task given the resources they had to gather. They had to go through the trouble of casting each motion capture actor to perfectly suit their role; in particular, they had to find a large man to play Bane. Developers don’t just get people off the street to do this. In order to be hired for motion capture, you need to be a credible actor and/or stunt person. I even met one at MIGS who told me this. Like actors in movies, motion capture actors have schedules that they and the developers need to organize. This was a huge problem for the team given the issue with getting the script on time.

There is a faster method to create these cutscenes. An alternative to motion capture is performance capture, a recording method that encompasses body motion capture, facial motion capture and voice recording at the same time. The problem, as you’d expect, is that it’s far too expensive.

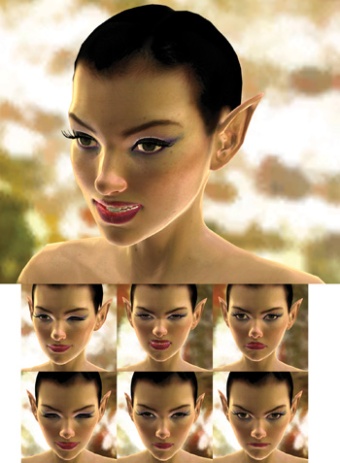

Fortunately, the long way proved to be much better in the aesthetics department. The voice acting was recorded separately with expert voice actors such as Troy Baker as the Joker. As for the facial animation, they did that with blend shapes, changing the facial expressions manually in Maya by interpolating with Catmull-Rom splines between 9 different expressions.

This ended up working better because they managed to avoid the uncanny valley and retain the exaggerated expressions of comic book characters.
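To give a rough idea of what interpolating between key expressions with Catmull-Rom looks like, here is a minimal Python sketch. It is not the team’s actual Maya setup, which wasn’t shown in the talk. I’m assuming each expression is stored as a list of blend shape weights, that the spline is the uniform Catmull-Rom variety, and that the first and last expressions are simply repeated at the ends; the names `catmull_rom`, `interpolate_expression` and `keys` are made up for illustration.

```python
def catmull_rom(p0, p1, p2, p3, t):
    """Uniform Catmull-Rom: curve passes through p1 at t=0 and p2 at t=1."""
    t2 = t * t
    t3 = t2 * t
    return 0.5 * (
        2.0 * p1
        + (-p0 + p2) * t
        + (2.0 * p0 - 5.0 * p1 + 4.0 * p2 - p3) * t2
        + (-p0 + 3.0 * p1 - 3.0 * p2 + p3) * t3
    )


def interpolate_expression(keys, u):
    """Blend between key expressions.

    keys: list of weight lists, one per key expression (hypothetical layout).
    u:    position along the keys, from 0 to len(keys) - 1.
    """
    last = len(keys) - 1
    u = min(max(u, 0.0), float(last))  # clamp to the valid range
    i = min(int(u), last - 1)          # index of the segment's first key
    t = u - i                          # position inside that segment
    # Assumption: repeat the end keys when a neighbour is missing.
    p0 = keys[max(i - 1, 0)]
    p1 = keys[i]
    p2 = keys[i + 1]
    p3 = keys[min(i + 2, last)]
    return [catmull_rom(a, b, c, d, t) for a, b, c, d in zip(p0, p1, p2, p3)]


# Nine made-up key expressions, each with two blend shape weights.
keys = [[i / 8.0, 1.0 - i / 8.0] for i in range(9)]
halfway = interpolate_expression(keys, 3.5)  # between expression 3 and 4
```

The nice thing about Catmull-Rom for this kind of work is that the curve goes exactly through every key expression, so the poses the animator sculpted are hit precisely, and the in-betweens ease smoothly instead of snapping like straight linear blending would.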

They captured all these movements using a virtual camera. But it’s not a traditional virtual camera that’s created in Maya and exported to the engine. The animators used a portable camera that shot the motion capture set, projecting the objects and animations into a virtual space. Like a regular camera, it’s handled and moved into position by a camera operator in order to get the exact angle they want. It’s barely different from traditional filmmaking.

Arkham Origins is one of the few games this year that made use of pre-rendered cinematics, which are higher quality but take up more disk space. After all the scenes are shot, they take them into the engine and composite them in order to have... drumroll please... SHADERS! That means adding lighting effects, dust particles and pyrotechnics to create a more lively and realistic environment.

The lengths the animators went to in creating their cutscenes are no different from how regular films are shot: they hire actors to perform in front of a camera handled by a camera operator, they need to follow a script, and they have to take the scenes and add effects later in post-production. It’s uncanny how much effort they put in given the number of obstacles they encountered, and producing what they did at that caliber is to be commended. I think these cutscenes have better animation than most Pixar movies.

My only disappointment is that there wasn’t enough time to ask him questions. I had tonnes.